Twitter investigates racial bias in image previews

Twitter is investigating after users discovered its picture-cropping algorithm sometimes prefers white faces to black ones.

Users noticed that when two photos - one of a black face, the other of a white one - were in the same post, Twitter often showed only the white face on mobile.

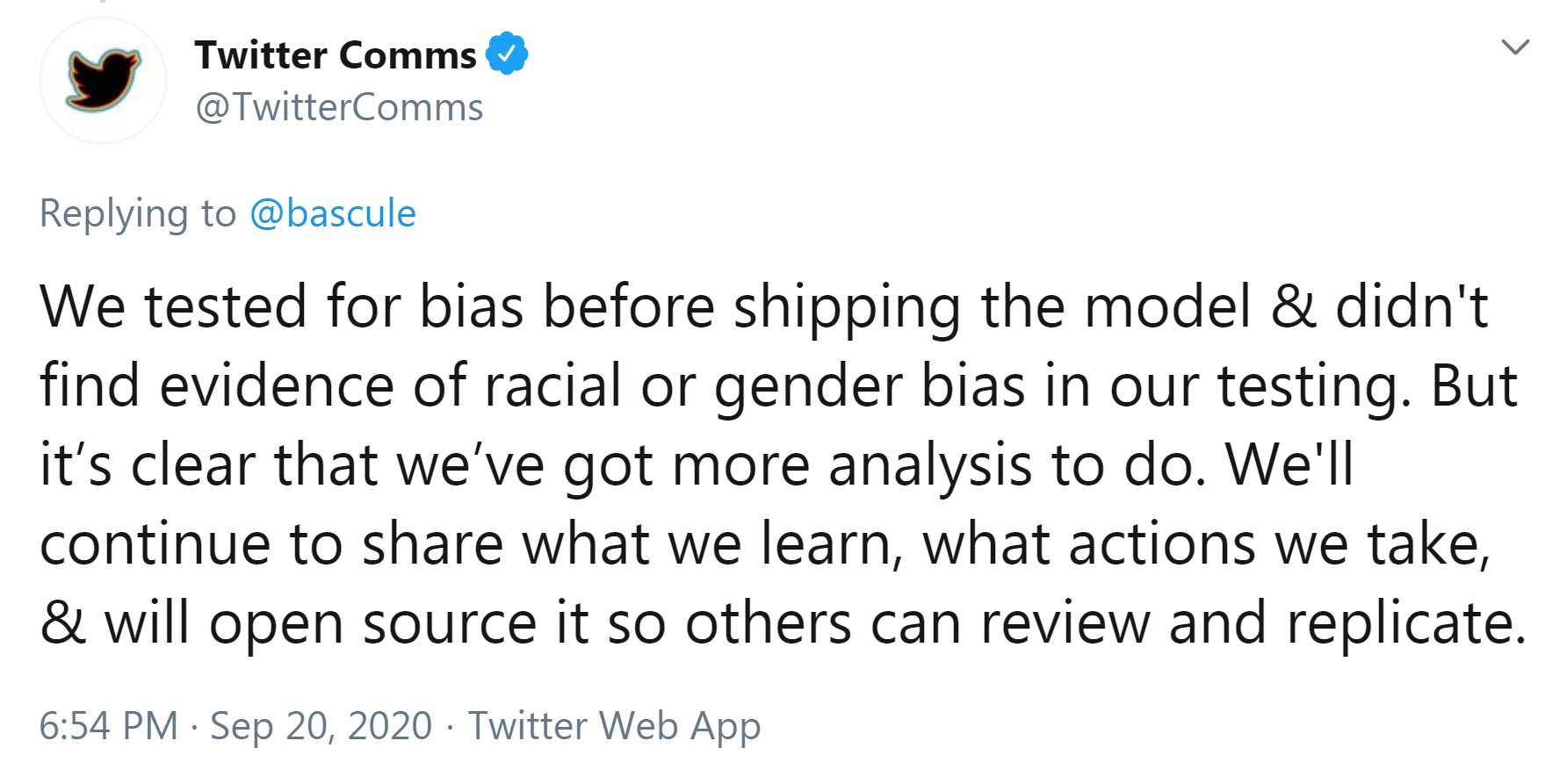

Twitter said it had tested for racial and gender bias during the algorithm's development.

But it added: "It's clear that we've got more analysis to do."

Twitter's chief technology officer, Parag Agrawal, tweeted: "We did analysis on our model when we shipped it - but [it] needs continuous improvement.

"Love this public, open, and rigorous test - and eager to learn from this."

Facial hair

The latest controversy began when university manager Colin Madland, from Vancouver, was troubleshooting why a colleague's head kept vanishing when he used the videoconferencing app Zoom.

The software appeared to be mistaking the black man's head for part of the background and removing it.

His discovery prompted a range of other experiments by users, which, for example, suggested:

Responding to criticism, he tweeted: "I know you think it's fun to dunk on me - but I'm as irritated about this as everyone else. However, I'm in a position to fix it and I will.

"It's 100% our fault. No-one should say otherwise."

'Many questions'

Zehan Wang, a research engineering lead and co-founder of the neural networks company Magic Pony, which has been acquired by Twitter, said tests on the algorithm in 2017, using pairs of faces belonging to different ethnicities, had found "no significant bias between ethnicities (or genders)" - but Twitter would now review that study.

"There are many questions that will need time to dig into," he said.

"More details will be shared after internal teams have had a chance to look at it."

